from Victor Gomes

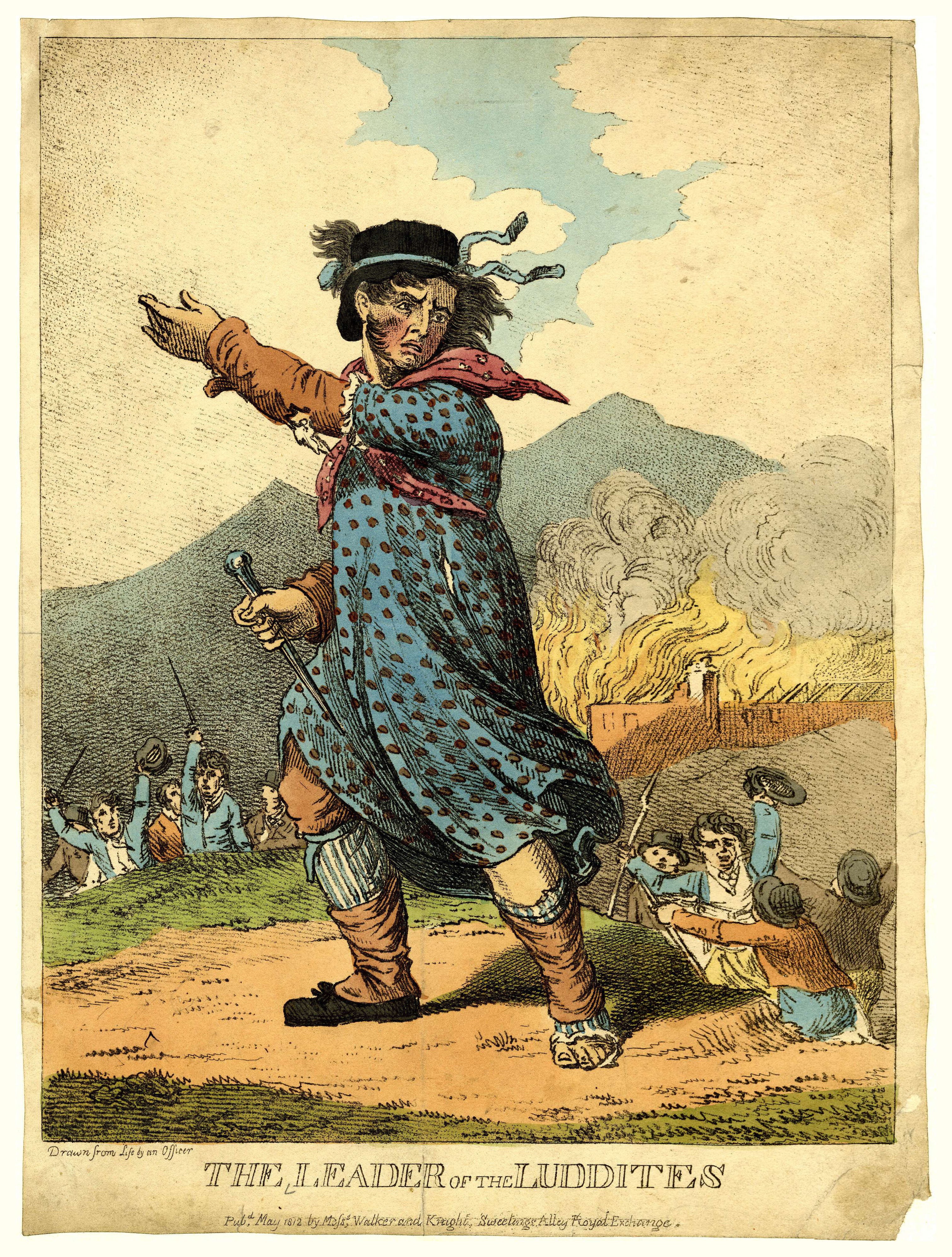

“Enoch made them, Enoch shall break them.”

Contact:

neoluddite@victorgom.es

@notnaughtknot

Discord: Email neoluddite@victorgom.es for an invite!

Beginning Tuesday, June 27th

You may have played around with ChatGPT, or perhaps even used DALL-E to generate images from text. Though Large Language Models have been around since 2018, they’re currently dominating the news thanks to the public release of these newer, even larger models. While impressive, like many technologies, they come at a cost and introduce new risks. However, there are specific issues related to the sheer scale of these models, and they highlight the dangers of machines which allow for the consolidation of labor.

These issues are especially concerning given the lack of regulation in the tech industry generally, and the tendency of productivity-increasing technology to further concentrate power in the hands of the few. This reading group will explore these risks and engage with how these models work, in the hopes of better organizing to protect the rights of workers and individuals. The goal is to have a better understanding of the costs (data, carbon, human labor) and risks (misinformation, unpredictability, bias) of making these machines, as well as the limitations in what they can learn about the world primarily through text. We will begin with “Resisting AI: An Anti-fascist Approach to Artificial Intelligence” by Dan McQuillan, with additional optional readings. If you’d like to learn more about me, you can visit victorgom.es for a bio.

Schedule

The only expectation is that you read the chapter(s) of the book listed for that meeting. I tried to keep it to around 30 pages on average. Even if you don’t read the assigned chapters, you’re still welcome to participate as long as you are mindful.

For each chapter, I’ve also noted some optional readings for context, so I’d suggest checking those out rather than reading ahead in the book! Optional readings are by no means required, but they may add to and deepen our conversation. They’re a mix of news articles, essays, and journal articles, so you can pick whichever type of text is most engaging to you. They pull from a range of disciplines and schools of thought, which I hope serves to highlight and survey how many different people conceptualize issues with AI, though the list is by no means exhaustive. If you ever find yourself struggling, reach out, either to me directly or to the group over Discord.

I’d suggest you read this before anything else for some historical context: What the Luddites Really Fought Against by Richard Conniff. The Luddites will also come up in Chapter 6.

- Meeting 1 (June 27th): Resisting AI

- Introduction

- 1. Operations of AI

- Deep Learning: A Critical Appraisal by Gary Marcus

- On the Dangers of Stochastic Parrots, Can Language Models Be Too Big? by Emily Bender, Timnit Gebru, Angelina McMillan-Major, & Shmargaret Shmitchell

- Stop feeding the hype and start resisting by Iris van Rooij

- Meeting 2 (July 11th): Resisting AI

- 2. Collateral Damage

- Ethical and social risks of harm from Language Models by Laura Weidinger et al.

- OpenAI Used Kenyan Workers on Less Than $2 Per Hour by Billy Perrigo

- Weizenbaum examines computers and society by Diana ben-Aaron

- 3. AI Violence

- Physiognomy’s New Clothes by Blaise Agüera y Arcas, Margaret Mitchell, & Alexander Todorov

- Will A.I. Become the New McKinsey? by Ted Chiang

- Large Datasets, A Pyrrhic Win for Computer Vision? by Vinay Uday Prabhu & Abeba Birhane

- Autism and the making of emotion AI: Disability as resource for surveillance capitalism by Jeff Nagy

- Meeting 3 (July 25th): Resisting AI

- 4. Necropolitics

- The Values Encoded in Machine Learning Research by Abeba Birhane et al.

- Machine Bias: Risk Assessments in Criminal Sentencing by Julia Angwin, Jeff Larson, Surya Mattu, & Lauren Kirchner

- How We Analyzed the COMPAS Recidivism Algorithm by Julia Angwin, Jeff Larson, Surya Mattu, & Lauren Kirchner

- Homeland Security Uses AI Tool to Analyze Social Media of U.S. Citizens and Refugees by Joseph Cox

- Algorithmic Colonization of Africa by Abeba Birhane

- Meeting 4 (August 8th): Resisting AI

- 5. Post-Machine Learning

- Pygmalion Displacement, When Humanising AI Dehumanises Women by Lelia Erscoi, Annelies Kleinherenbrink, & Olivia Guest

- Everyone should decide how their digital data are used – not just tech companies by Jathan Sadowski, Salomé Viljoen, & Meredith Whittaker

- What is the point of fairness? Disability, AI, and the Complexity of Justice by Cynthia L. Bennett & Os Keyes

- Automating autism: Disability, discourse, and Artificial Intelligence by Os Keyes

- 6. People’s Council

- Robot Rights? Let’s Talk about Human Welfare Instead by Abeba Birhane & Jelle van Dijk

- Effective Altruism Is Pushing a Dangerous Brand of ‘AI Safety’ by Timnit Gebru

- Notes toward a Neo-Luddite Manifesto by Chellis Glendinning

- Meeting 5 (August 22nd): Resisting AI

- 7. Anti-Fascist AI

- Towards decolonising computational sciences by Abeba Birhane & Olivia Guest

- Algorithmic Injustices, Towards a Relational Ethics by Abeba Birhane & Fred Cummins

- On Logical Inference over Brains, Behaviour, and Artificial Neural Networks by Olivia Guest & Andrea Martin

Additional resources

- Potential Future Reads

- Race After Technology by Ruha Benjamin

- The Human Use of Human Beings by Norbert Wiener

- Blood in the Machine: The Origins of Rebellion Against Big Tech by Brian Merchant

- Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor by Virginia Eubanks

- Questioning Technology: Tool, Toy or Tyrant by John Zerzan & Alice Carnes

- Technofix: Why Technology Won’t Save Us or the Environment by Michael Huesemann & Joyce Huesemann

- Audio/Video Media

- Links to more links

- Critical Lenses on AI by Iris van Rooij

- A Luddite Library by LibrarianShipwreck

- The brittleness of AI

- Poisoning Language Models During Instruction Tuning by Alexander Wan, Eric Wallace, Sheng Shen, & Dan Klein

- Intriguing properties of neural networks by Christian Szegedy et al.

- Explaining and Harnessing Adversarial Examples by Ian J. Goodfellow, Jonathon Shlens, & Christian Szegedy

- Adversarial Patch by Tom B. Brown et al.

- Researchers collect confusing images to expose the weak spots in AI vision by James Vincent

- More on Luddites

- I’m a Luddite. You should be one too by Jathan Sadowski

- Is It O.K. To Be A Luddite? by Thomas Pynchon

- Byron Was One of the Few Prominent Defenders of the Luddites by Kat Eschner

- Rage Against the Machines by Ronald Bailey. This one is a critical take on the Luddites (and on colloquial uses of the term to mean simply being against technology), but I feel it’s a good example of how Luddites are often thought of.

- Poems

- Song for The Luddites by Lord Byron

- A Luddite Lullaby by Don Bogen

- All Watched Over by Machines of Loving Grace by Richard Brautigan

- I Am the People, the Mob by Carl Sandburg

- They Say This Isn’t a Poem by Kenneth Rexroth

- Under Which Lyre by W.H. Auden

- When I Heard The Learn’d Astronomer by Walt Whitman